Final Project

An immersive art installation

An - Poonam

TL;DR: you can jump straight to our demo video here.

Project objectives

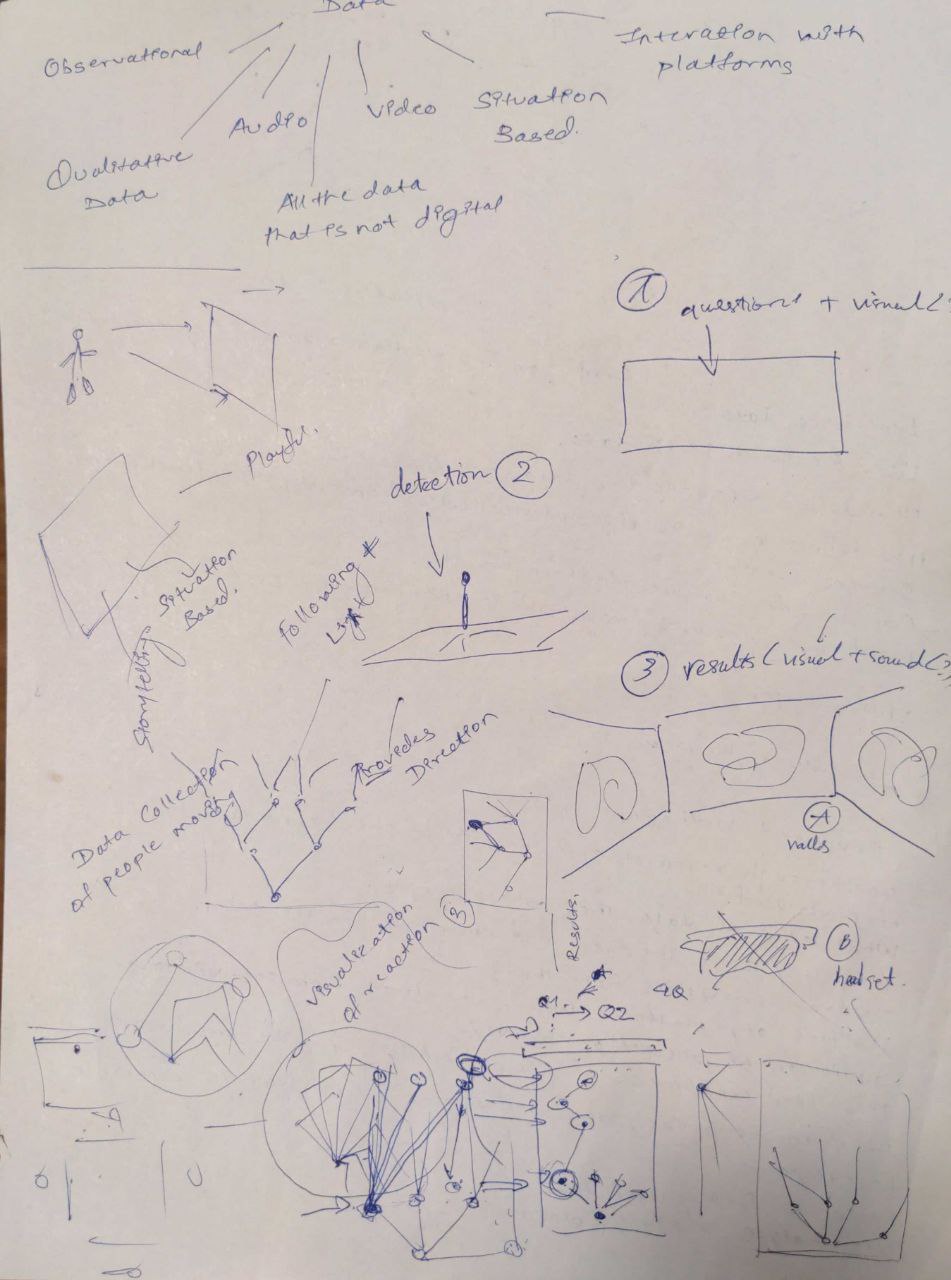

After I came up with the initial idea (capturing participants’ emotions and presenting them as audio-visuals), Poonam joined the project, and together we developed and adjusted the idea to fit the tools and venues that were available to us.

Using embodied interactions to build a new kind of storytelling game experience.

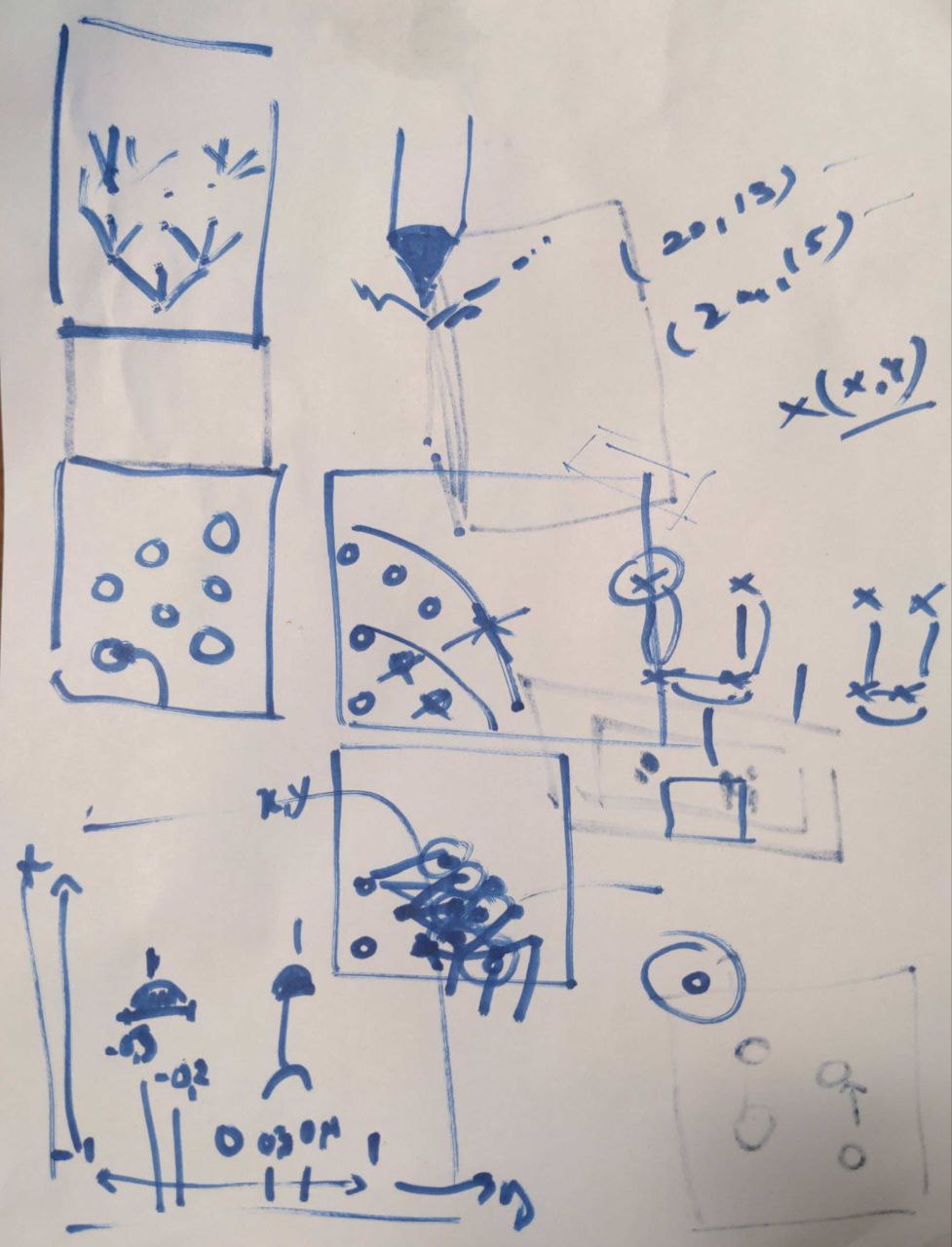

Throughout the course, we’ve learnt to work with different applications and approaches for integrating embodied interaction with audio-visuals. Building on my original idea of detecting the participant’s movements and changing the audio-visuals accordingly, we constantly tried and tested new features in the project.

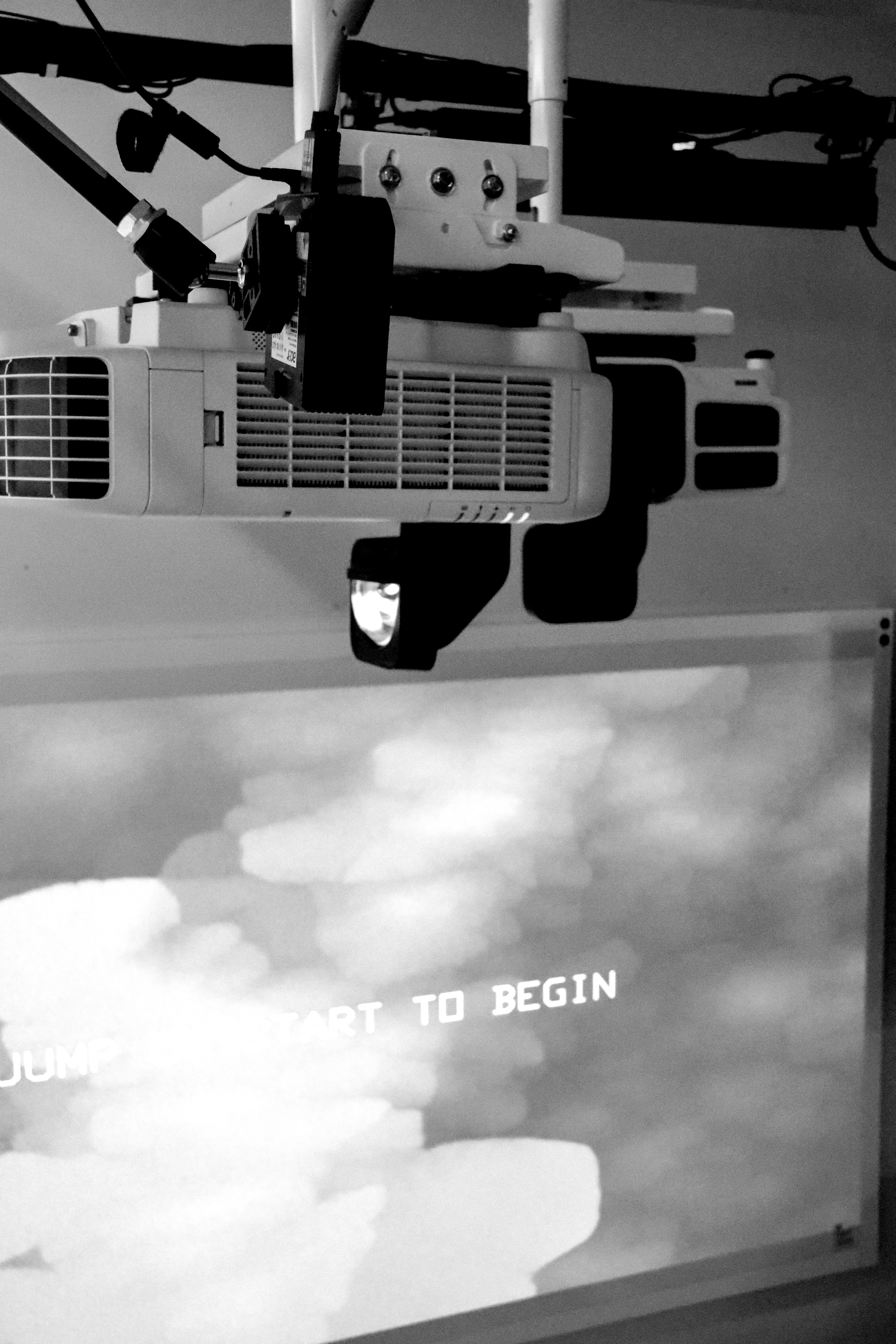

The start/standby screens, with background music playing continuously

The fundamental elements that we decided should be included were:

- Telling a story that evokes emotions, is educational, or requires thinking and interaction from the participants.

- Tracking the participant’s location in the room and their body gestures, and converting them into commands in the game.

- Changing the audio-visuals based on those commands.

Tools and methods

To bring the idea to life, we worked with:

- A separate room with 2 screens for an immersive experience.

- A mapped floor with instructions and guidance throughout the game.

- An Azure Kinect, a body-tracking device used to detect body gestures and convert them into commands.

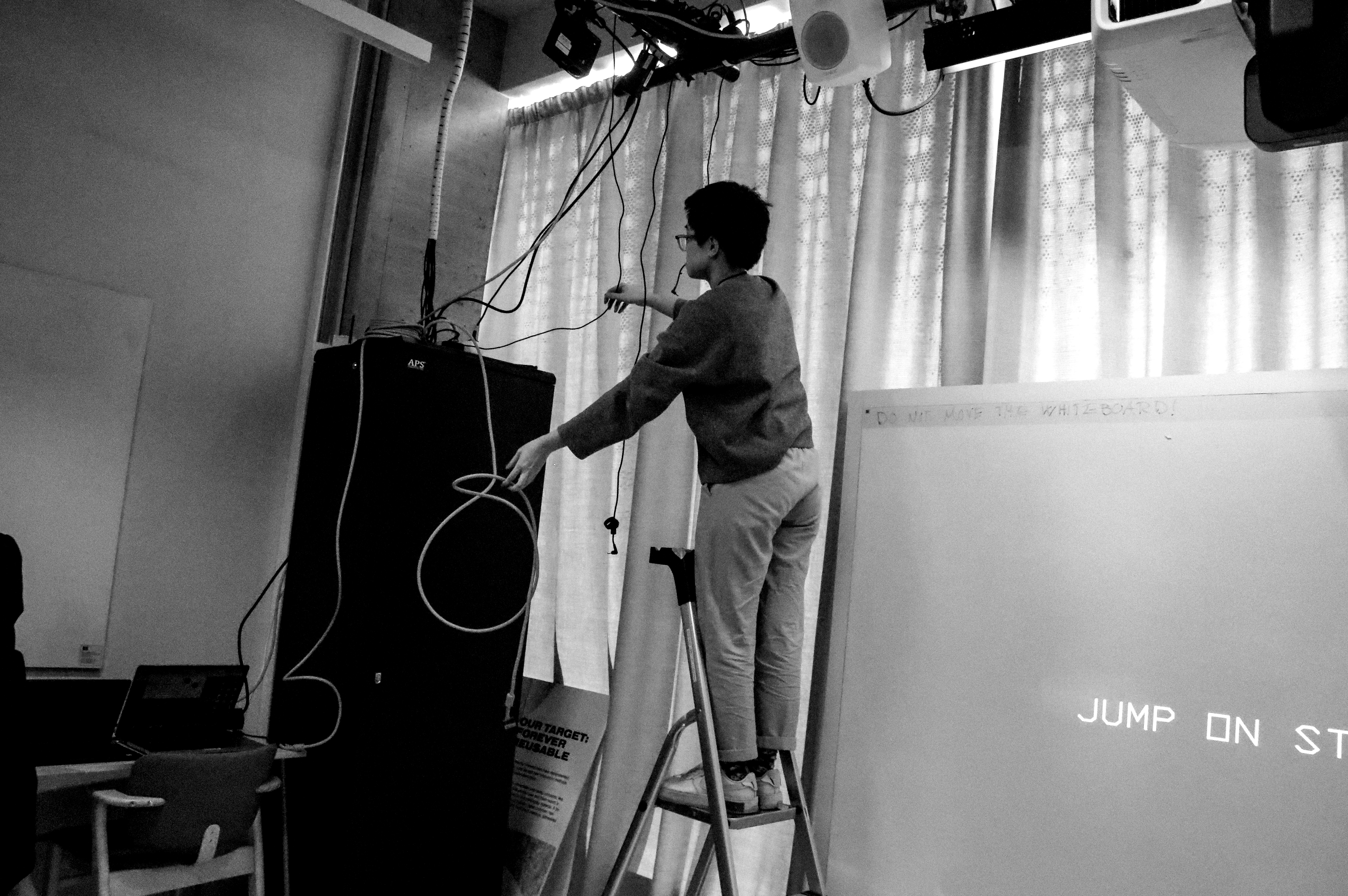

- 3 projectors to draw the icons on the floor and 2 more for projecting the big screens.

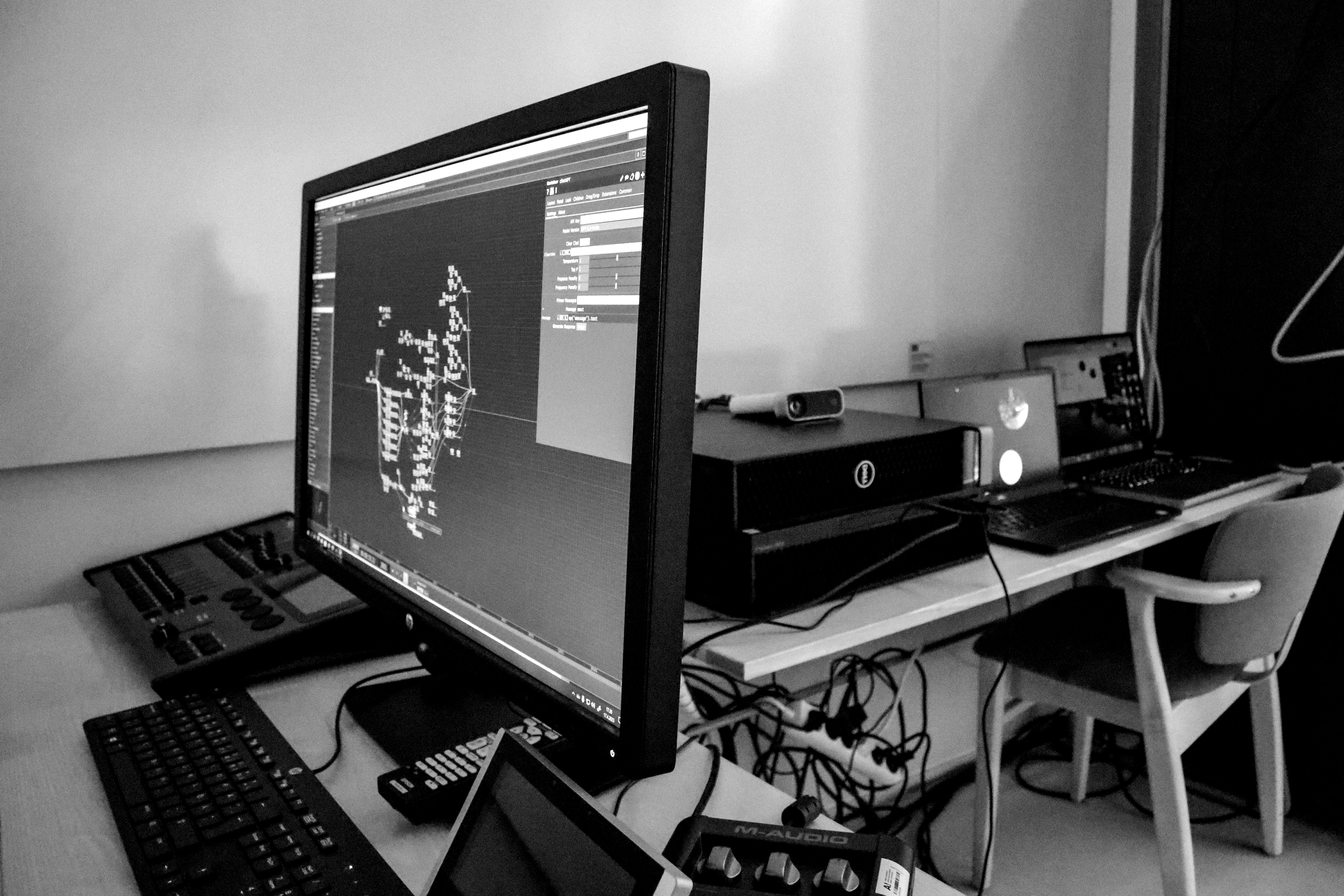

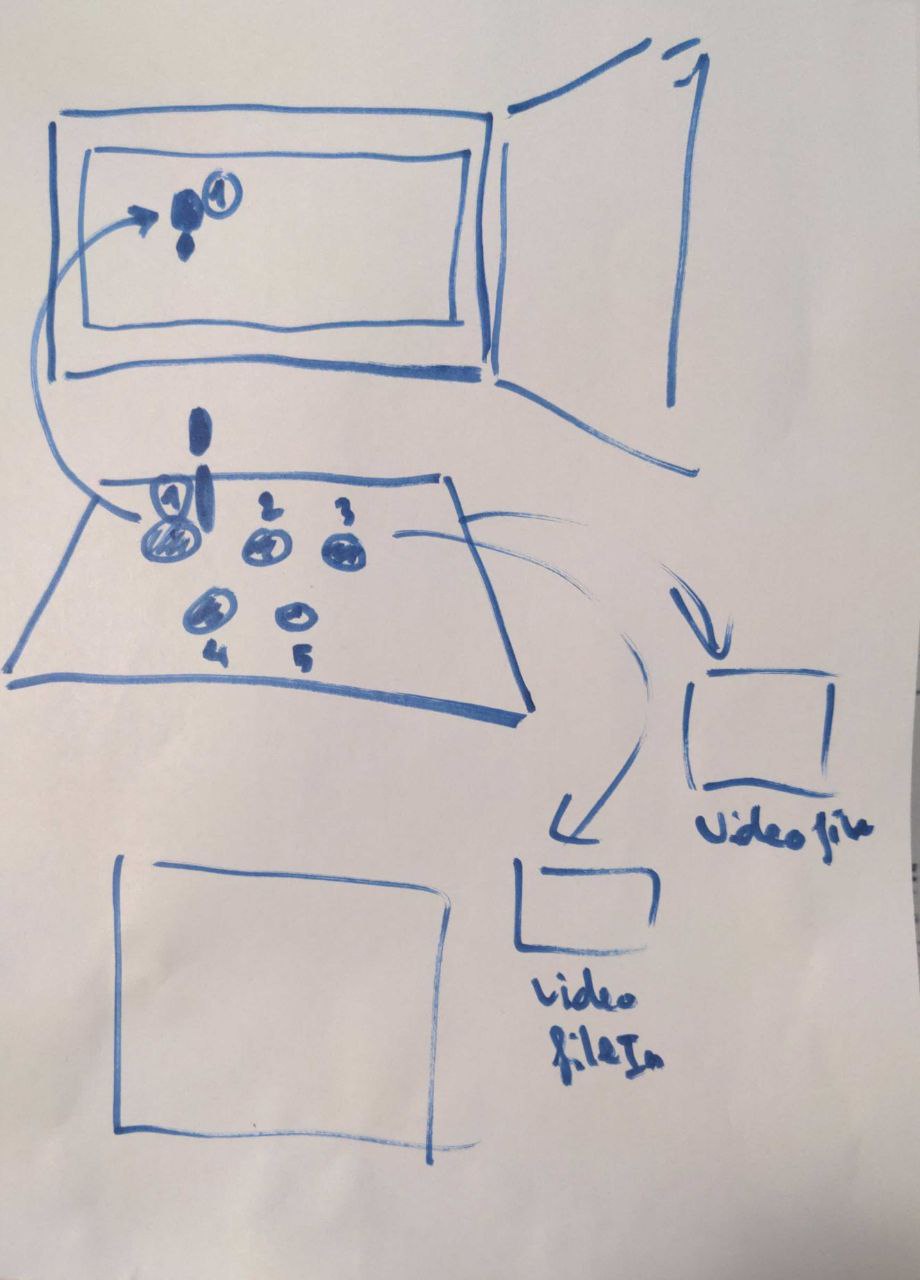

In total, 3 projectors were installed for mapping the floor; 1 audio interface and 1 microphone fed the participant’s voice into the system; 1 Kinect was placed at waist level for the best possible capture of the participant’s location; and 2 laptops and 1 desktop had to run simultaneously during play.

TouchDesigner was used as the main software to create and control the interface. As this was my very first time working with the software, most of the time was spent watching tutorials, reading about its features and exploring them. The most difficult part was getting the visuals to change based on the different positions detected by the Kinect. At first we planned to have about 12 positions, but as the story changed and given the small size of the room, we narrowed it down to 6 positions.
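To make the position idea concrete, here is a minimal sketch in plain Python of how a tracked floor coordinate could be mapped to one of 6 positions. It is not taken from our TouchDesigner file: the 3 x 2 grid, the room dimensions in metres, and the names `Zone`, `make_grid` and `zone_for` are all assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Zone:
    """A rectangular floor area (hypothetical layout, units assumed to be metres)."""
    name: str
    x_min: float
    x_max: float
    z_min: float
    z_max: float

    def contains(self, x: float, z: float) -> bool:
        # Half-open ranges so that neighbouring zones never overlap.
        return self.x_min <= x < self.x_max and self.z_min <= z < self.z_max


def make_grid(cols: int = 3, rows: int = 2, width: float = 3.0,
              depth: float = 2.0, x0: float = -1.5, z0: float = 1.0) -> List[Zone]:
    """Split an assumed floor rectangle into cols * rows zones named P1, P2, ..."""
    cell_w = width / cols
    cell_d = depth / rows
    zones = []
    for r in range(rows):
        for c in range(cols):
            zones.append(Zone(
                name=f"P{r * cols + c + 1}",
                x_min=x0 + c * cell_w, x_max=x0 + (c + 1) * cell_w,
                z_min=z0 + r * cell_d, z_max=z0 + (r + 1) * cell_d,
            ))
    return zones


def zone_for(x: float, z: float, zones: List[Zone]) -> Optional[str]:
    """Return the name of the zone containing (x, z), or None if outside all zones."""
    for zone in zones:
        if zone.contains(x, z):
            return zone.name
    return None


zones = make_grid()
current = zone_for(0.0, 1.5, zones)  # a participant standing near the middle front
```

In a setup like ours, `x` and `z` would come from the tracked body position, and the returned name would decide which scene to show. Going from 12 positions to 6 only means changing the grid size.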

The arrangement inside the TouchDesigner file has 3 fundamental parts: setting the range of each position in the list and putting the positions in a changing order, and managing the scenes on screens 1 and 2, which change sequentially based on the positions. Background sounds, voice-over and the ChatGPT conversation were added at the last minute to enhance the experience.

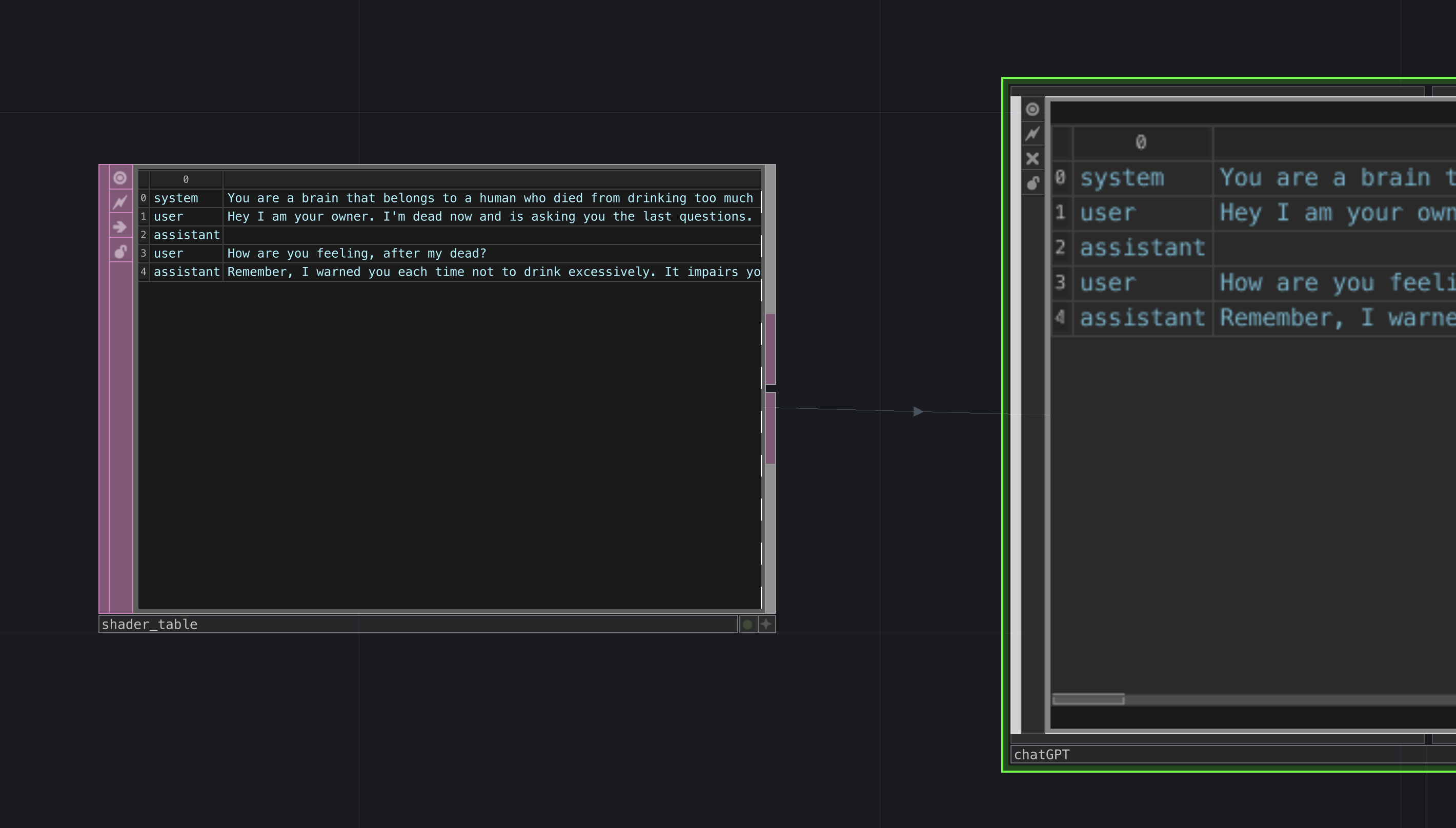

For the TouchDesigner ChatGPT plugin, credit goes to Torin Blankensmith, who created wonderful tutorials and files that needed only a few adjustments to work in our project. Being able to integrate something so powerful and suitable for the project in such a simple way was a blessing. To get the most out of the function, we wrote a specific backstory for ChatGPT as a base so that it could really get into character for the conversation. For example, the “brain” character’s backstory is that it was the brain of a now-dead person and feels negative emotions, such as sadness and anger, towards them. As a result, the answers ChatGPT generated were judgemental and full of sorrow.
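As a rough illustration of the backstory idea, the sketch below builds the kind of role-tagged message list that chat-style language model APIs commonly accept, with the backstory placed first as a "system" message. How the plugin passes the prompt internally is not something we looked into, so treat this as an assumption; the backstory wording and the function name `build_messages` are hypothetical, and no request is sent.

```python
from typing import Dict, List

# Hypothetical wording that paraphrases our "brain" character; not the exact prompt we used.
BRAIN_BACKSTORY = (
    "You are the brain of a person who has recently died. "
    "You feel sad and angry towards them. "
    "Stay in character and answer briefly."
)


def build_messages(backstory: str,
                   history: List[Dict[str, str]],
                   question: str) -> List[Dict[str, str]]:
    """Put the backstory first so that every answer is generated in character."""
    messages = [{"role": "system", "content": backstory}]
    messages.extend(history)  # earlier turns, if the conversation continues
    messages.append({"role": "user", "content": question})
    return messages


messages = build_messages(BRAIN_BACKSTORY, [], "How did I die?")
```

Giving each character its own backstory string is then enough to give it a different personality, since the rest of the message list stays the same.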

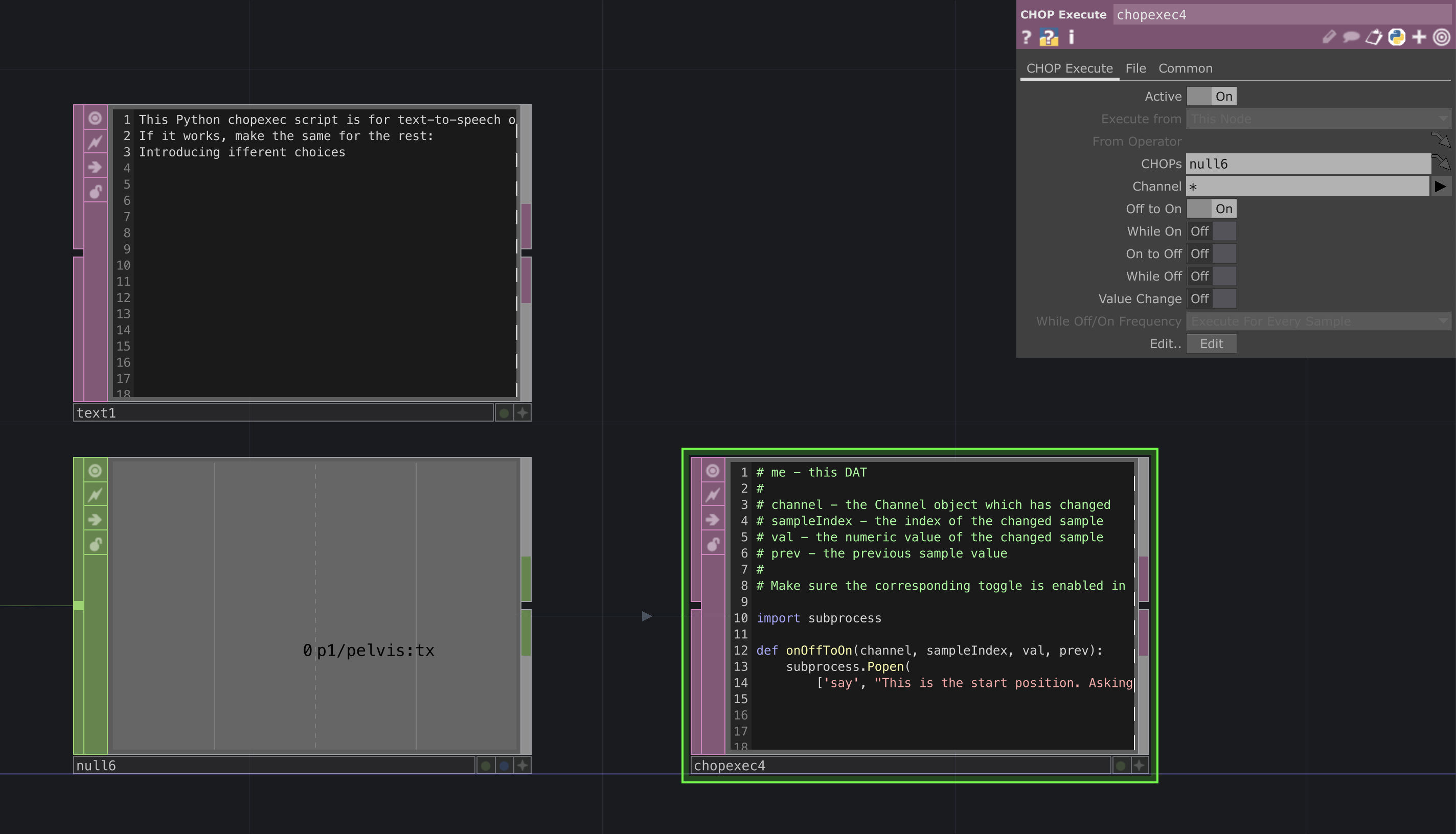

For voice-overs, 2 methods were tested. The first was implementing the Google Text-to-Speech API inside TouchDesigner and generating the audio internally. When that didn’t work well, I came across a tutorial on YouTube that demonstrated using simple Python code to generate the speech. Although it had some limitations in adjusting the tone and so on, it was the best option we had and it worked perfectly in testing. However, we couldn’t get it working during the presentation, as the Aalto desktop simply didn’t have Python installed and we did not have time to ask for administrator rights to install it.

The Gameplay

Knowing the importance of giving the player the right hook, we needed to find something that catches attention: direct and, if necessary, horrific.

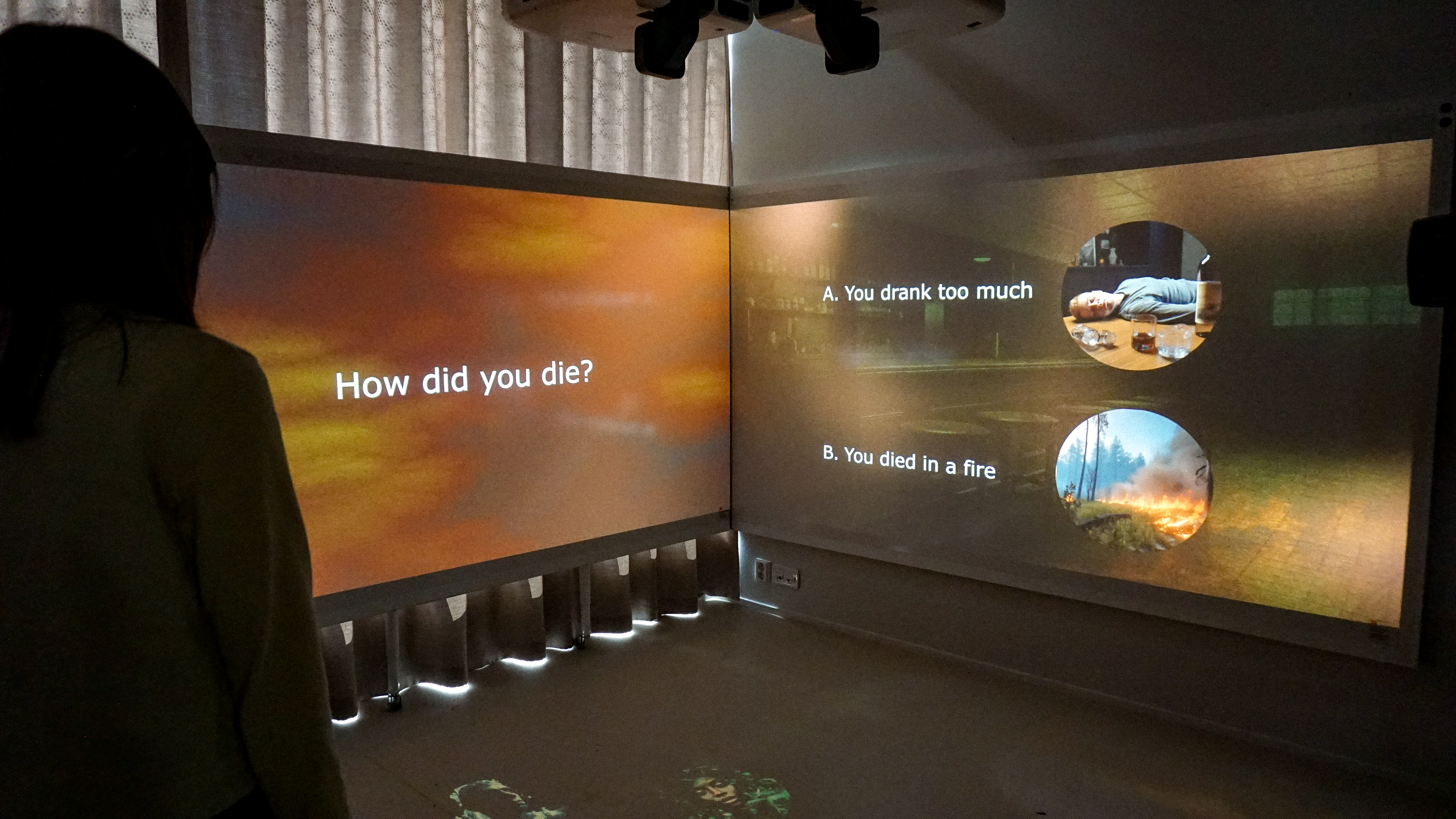

How did you die?

Was that catchy enough? We hoped so. We used a small trick to get people jumping on our “Start” button: instead of setting parameters to catch the body’s jumping movement, which can be quite a hassle as a small jump is not always easy to detect, we put a small Delay CHOP before changing the screen, and most people would start jumping after standing on the “Start” button for 1-2 s.
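The same hold-to-start idea can be sketched in plain Python. This is not the Delay CHOP itself but a slightly stricter variant that resets if the participant steps off the button early; the class name `DwellTrigger` and the 1.5 s hold time are assumptions for illustration.

```python
class DwellTrigger:
    """Fires once after the participant has stayed on a button for hold_seconds."""

    def __init__(self, hold_seconds: float = 1.5):
        self.hold_seconds = hold_seconds
        self._entered_at = None  # time at which the participant stepped on
        self.fired = False

    def update(self, on_button: bool, now: float) -> bool:
        """Call once per frame; returns True only on the frame the trigger fires."""
        if not on_button:
            # Stepping off resets the timer and re-arms the trigger.
            self._entered_at = None
            self.fired = False
            return False
        if self._entered_at is None:
            self._entered_at = now
        if not self.fired and now - self._entered_at >= self.hold_seconds:
            self.fired = True
            return True
        return False


start = DwellTrigger(hold_seconds=1.5)
start.update(True, 0.0)            # just stepped on: nothing happens yet
changed = start.update(True, 1.6)  # still standing there: the screen changes
```

The participant only has to stand still, so no jump detection is needed, and whatever they do during the wait feels like it caused the screen change.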

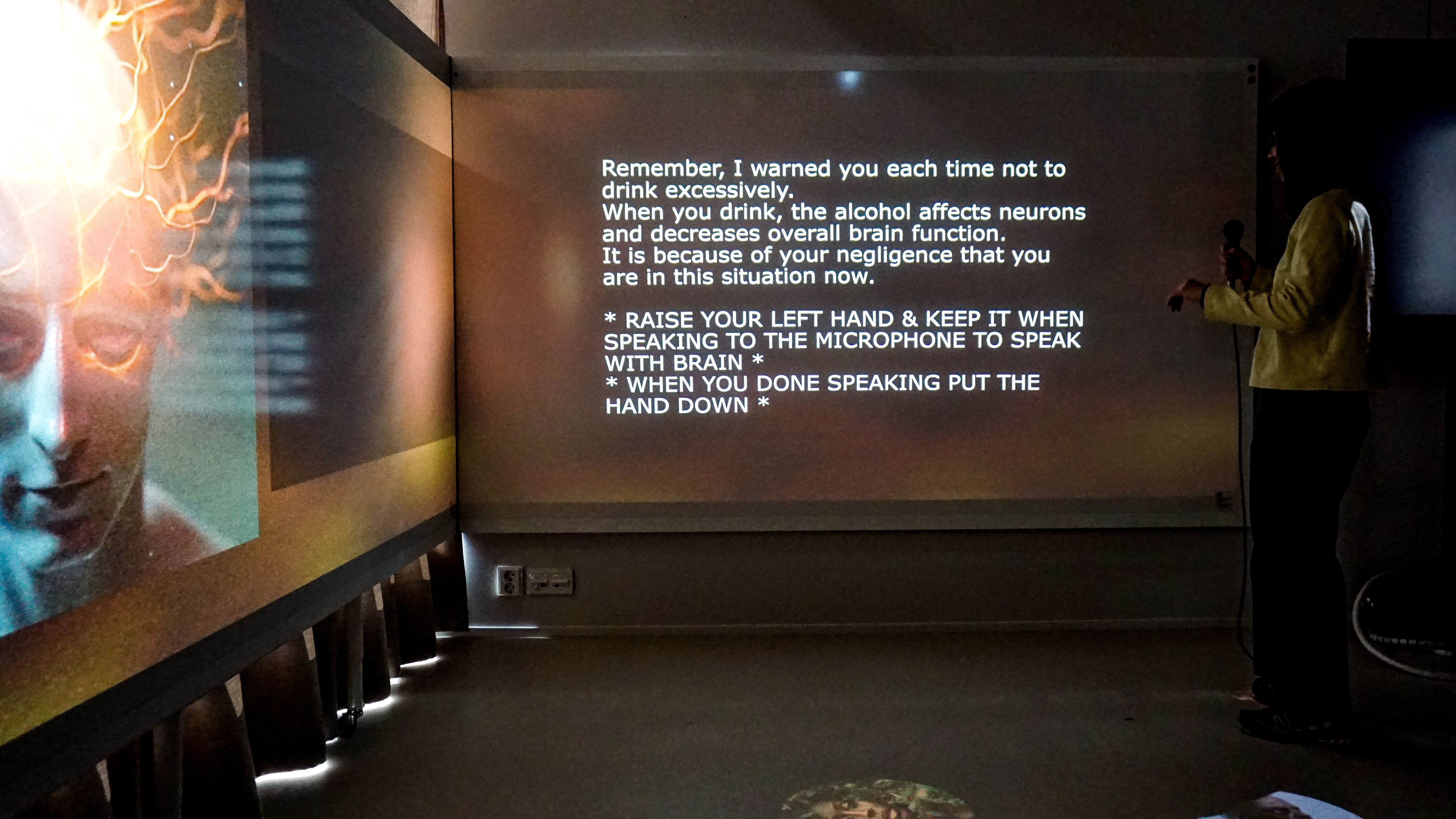

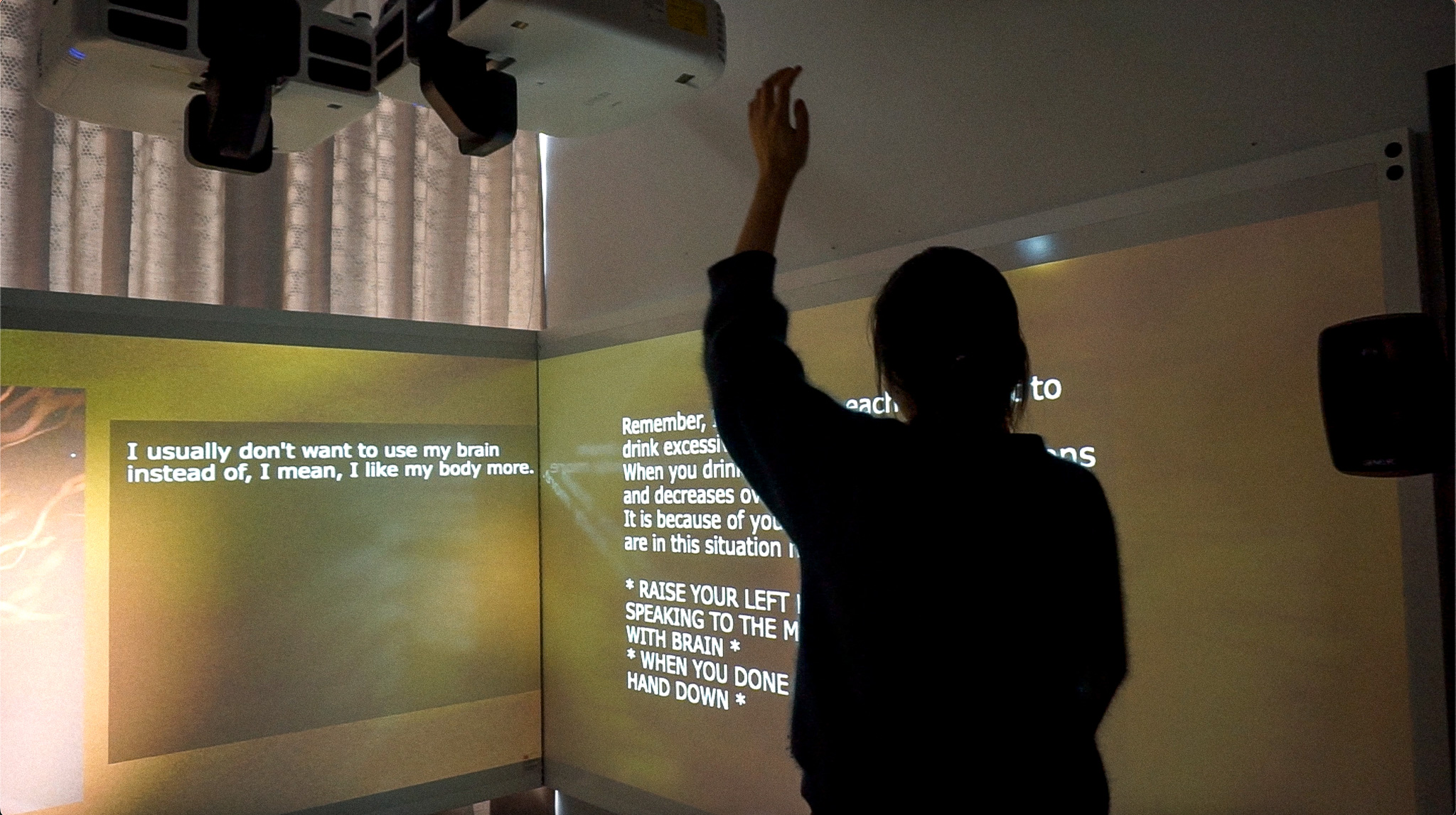

The participants then navigate through the game by answering questions. Each answer is illustrated by an icon on the floor, and the participant chooses one by standing on it. The installation has 2 screens, each showing a different scene, and the two scenes are related to each other: a set of questions and answer options, storylines and their characters, or an animation of a character speaking alongside its script.

In addition, we were able to make a nice little addition to our project in the last few days: a ChatGPT conversation mode for our characters.

A story is no more than a plain canvas without characters, so we designed AI-generated talking characters with distinct personalities and backgrounds.

We applied a body gesture familiar to almost every student: the participant raises their hand to “talk with the character”. The gesture is detected as a signal for Whisper in TouchDesigner to listen to their question and send it to ChatGPT to generate the answer.

Participants interacting with the character’s chatGPT conversation mode
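A raised-hand check like the one described above can be sketched as a comparison of joint heights. This is an illustration rather than our actual network: the joint names, the 10 cm margin, and the assumption that a larger y value means higher up are all hypothetical (flip the comparison if the tracker's y axis points down).

```python
from typing import Iterable, Iterator, Mapping


def hand_raised(joints: Mapping[str, float], margin: float = 0.10) -> bool:
    """True if either hand is clearly above the head (y assumed to point up, in metres)."""
    highest_hand = max(joints["hand_l_y"], joints["hand_r_y"])
    return highest_hand > joints["head_y"] + margin


def rising_edges(samples: Iterable[bool]) -> Iterator[int]:
    """Yield the frame indices where the gesture goes from not raised to raised."""
    previous = False
    for index, current in enumerate(samples):
        if current and not previous:
            yield index
        previous = current


frames = [
    {"head_y": 1.60, "hand_l_y": 0.90, "hand_r_y": 0.95},  # hands down
    {"head_y": 1.60, "hand_l_y": 0.90, "hand_r_y": 1.80},  # right hand up
    {"head_y": 1.60, "hand_l_y": 0.90, "hand_r_y": 1.85},  # still up
]
starts = list(rising_edges(hand_raised(f) for f in frames))
```

Reacting only to the rising edge means the speech recognition starts listening once per raised hand instead of restarting on every frame while the hand stays up.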

After introducing the participants to the storylines and the characters’ backgrounds, our game gives them the opportunity to interact and talk with the characters to maximize the user experience. This leaves a lot of room to play around and explore inside the game. Even we don’t know exactly how each conversation will turn out, because of the randomness of the AI-powered ChatGPT plugin and the unexpected turns the questions take.

The making process

The making process was divided into 2 main parts: working with TouchDesigner and putting the installation together. Of the roughly 1 month we had, about 3/4 of the time was spent on learning TouchDesigner and trying to come up with the best solutions.

ChatGPT was consulted on integrating ChatGPT into TouchDesigner, and we also asked it some general questions about the software’s functions. It did help in a way: although its step-by-step answers couldn’t be followed literally, they gave us some idea of how to get a feel for the flow and solve the problems.

While most of our attempts in TD failed, they were necessary to understand how the software works and to make the most of each function. The process also allowed me to learn some simple Python, which I’d never worked with before, as well as to get familiar with installation devices and systems.

As it was the first time either of us had built an art installation from scratch, it was difficult to organise and get things going in the beginning. One of the most challenging parts was setting up the 3 projectors and their related accessories. It took some time running around and asking for equipment, but we were lucky to gather everything we needed. My body was sore for almost a week afterwards, but it was worth it.

More details on the development process can be found in the Learning Diary.

Limitations & Self-evaluation

Overall, the project achieved what we initially planned and even exceeded some of our expectations. We also overcame some inconvenient issues, including the exhibition room being booked the day before the presentation. Although we only had a few hours in the evening to finalize everything, we made it work by testing what we could in the days before and asking permission to leave some equipment inside the room.

There are some aspects that could have been done better or fixed if we had had more time with the room:

- ChatGPT & speech-to-text Whisper: until the presentation day, we only got the AI-generated conversation working with 1 character. Due to a still-unknown problem inside the software, the function couldn’t be duplicated.

- Voice-overs: sadly, our Python-powered text-to-speech couldn’t run on the school desktop as it doesn’t have Python installed. We were too caught up with other setups to notice the problem in time. But it would be a quick fix if the project were to be implemented again.

- Lack of sound effects: so far the only sound we have is the background music. Even though it was effective in setting the right mood, we’d love some sound effects as well, for example when a button is stepped on or when a question is answered. This should also be an easy fix, but we couldn’t manage it given the timing and our working capacity.

- Overly bright light: the blackout curtains in the room weren’t enough to block out the summer sunshine completely. We couldn’t present in the morning, so the bright light badly affected the visibility of the icons on the floor.

We were happy, anyway.

Demo Video

This video combines a demonstration of how the game is played; various test runs by our classmates, teacher and friends; and close-ups of the setup before and during play.

Thanks for reading!

And sincerely, many thanks to Matti, Eduard, Calvin, our classmates, Halsey, and the guys in Aalto Takeout. Nothing would have been the same without any of you.